Building a Rabbit Hole: Progressive Disclosure in AI Chat

AI responses don't branch. Your curiosity does. So what would a chat interface look like if it branched too? Not the linear back-and-forth we've grown accustomed to, but something closer to how we actually explore ideas: jumping from concept to concept, diving deeper when something sparks interest, and keeping our context as we wander.

We think in networks, not lists. When you read about photosynthesis, you might detour into chlorophyll chemistry, then come back to the bigger picture. Most AI chat interfaces force you to choose: either stay on the main path or abandon your context entirely. What happens when we let the interface match the way we actually think?

What I Built

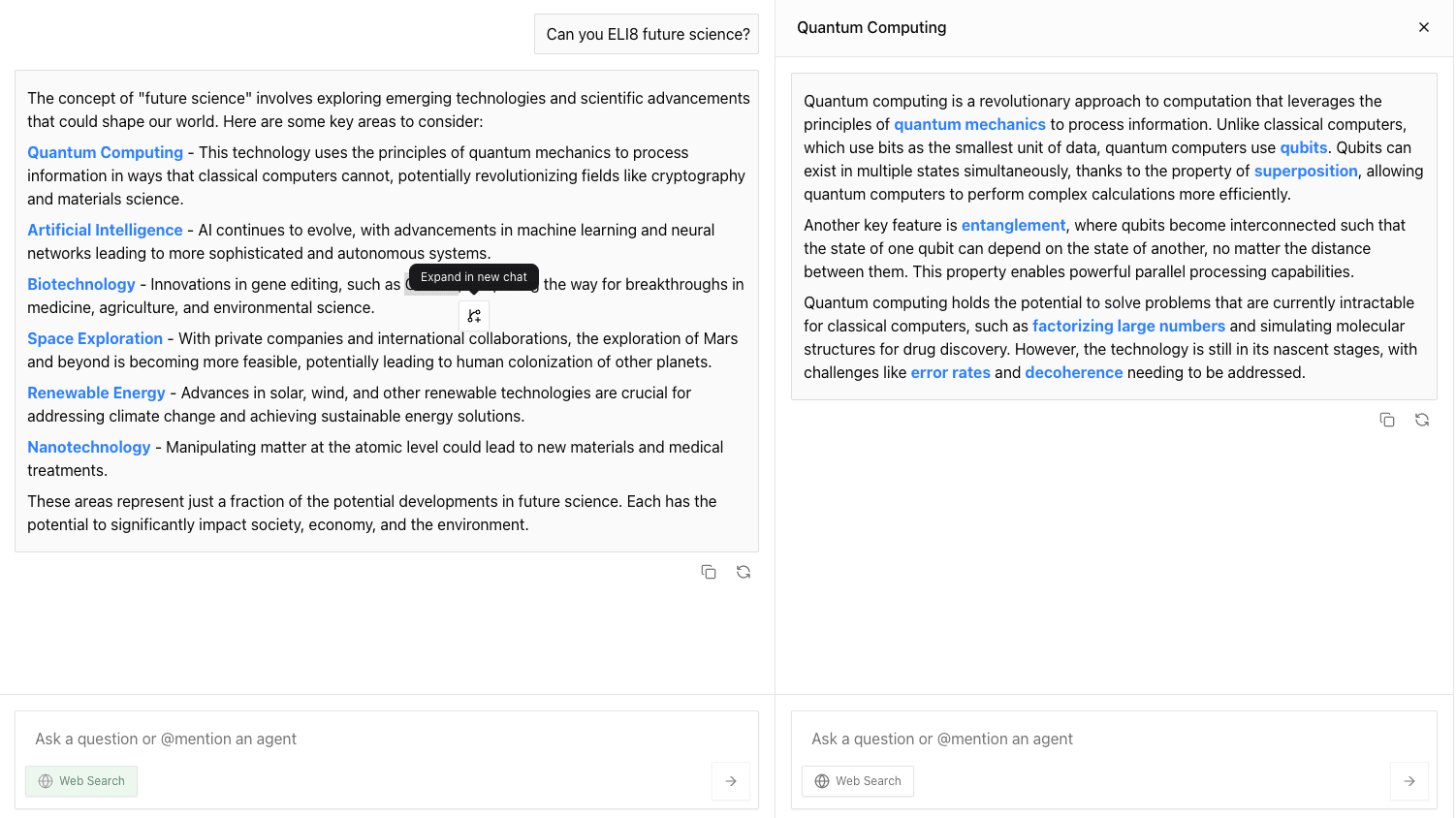

I wanted to build something different: a proof-of-concept AI chat interface where every response includes expandable terms that open side panels with deeper explanations. Click "quantum computing" and a new panel slides in from the right, inheriting the context of your original question while diving deeper into that specific concept. Each panel can spawn its own branches, so you can keep exploring as deep as you like, and every panel remembers where it came from.

The tech stack is straightforward: Next.js, OpenAI's API, and React with custom markdown parsing. The interesting part, though, isn't the infrastructure. It's how the AI was prompted to create its own navigation.

Prompting AI to Mark Its Own Navigation

Here's the core insight: I didn't build a complex system to decide which terms should be expandable. Instead, the system prompt tells the AI to mark expandable terms itself, using a simple directive syntax.

The AI receives these instructions:

For any newly introduced or potentially unfamiliar term, wrap it using:

:expandable[term]{ to="Term Title" }

Use bold if the term is important:

:expandable[**quantum computing**]{ to="Quantum Computing" }

Only link the term the first time it's mentioned.
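
To make the setup concrete, here is a minimal sketch of how those instructions might sit in the system message of a chat request. It only builds the messages array in the role/content shape the OpenAI Chat Completions API accepts and leaves the network call out; EXPANDABLE_INSTRUCTIONS and buildMessages are hypothetical names, not the author's code.

```typescript
// Chat message shape used by the OpenAI Chat Completions API (role + content).
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

// Hypothetical constant: the expandable-term instructions quoted in the post.
const EXPANDABLE_INSTRUCTIONS = [
  "For any newly introduced or potentially unfamiliar term, wrap it using:",
  ':expandable[term]{ to="Term Title" }',
  "Use bold if the term is important:",
  ':expandable[**quantum computing**]{ to="Quantum Computing" }',
  "Only link the term the first time it's mentioned.",
].join("\n\n");

// Hypothetical helper: put the instructions in the system message,
// replay earlier turns, then append the new question.
function buildMessages(question: string, history: ChatMessage[] = []): ChatMessage[] {
  return [
    { role: "system", content: EXPANDABLE_INSTRUCTIONS },
    ...history,
    { role: "user", content: question },
  ];
}

const messages = buildMessages("How do airplanes fly?");
console.log(messages.map((m) => m.role)); // [ 'system', 'user' ]
```

Keeping the instructions in the system message rather than the user turn means they apply to every response in the conversation without the reader ever seeing them.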

When the AI writes:

Airplanes achieve :expandable[**flight**]{ to="Flight" } through the principles of :expandable[**lift**]{ to="Lift" }

my markdown parser converts those directives into clickable React components that dispatch CustomEvents when clicked.
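
The real pipeline parses these directives with remark-directive, as described further down. Purely to show what each directive carries, here is a regex-based sketch that pulls out the visible label and the to target. It is a deliberate simplification, not the author's parser: it assumes the exact :expandable[label]{ to="Title" } shape, a double-quoted to value, and no nested brackets inside the label.

```typescript
// One parsed directive: the visible label and the panel title it links to.
interface ExpandableLink {
  label: string; // text inside [...], possibly with **bold** markers
  to: string; // value of the to="..." attribute
}

// Simplified pattern (assumption): no "]" inside the label, double-quoted to value,
// optional whitespace inside the braces.
const EXPANDABLE_RE = /:expandable\[([^\]]+)\]\{\s*to="([^"]*)"\s*\}/g;

function extractExpandables(markdown: string): ExpandableLink[] {
  const links: ExpandableLink[] = [];
  for (const match of markdown.matchAll(EXPANDABLE_RE)) {
    links.push({ label: match[1], to: match[2] });
  }
  return links;
}

const sample =
  'Airplanes achieve :expandable[**flight**]{ to="Flight" } through the principles of :expandable[**lift**]{ to="Lift" }';
console.log(extractExpandables(sample).map((link) => link.to)); // [ 'Flight', 'Lift' ]
```

A regex like this is handy for quick checks, but a proper markdown parser is the safer choice once labels can contain nested formatting.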

The constraint, forcing the AI to explicitly mark expandable terms, turned out to be the feature. The AI is remarkably consistent at picking out the concepts worth exploring. It's like a Wikipedia article that writes its own links as it is being written.

Technical Decisions That Mattered

Horizontal stacking over modals or tabs. When you click an expandable term, a new 700px-wide panel slides in from the right. The main chat stays visible, shrinking slightly. This spatial arrangement matters: you can see where you came from. Your peripheral vision maintains the thread while you explore the branch. Modals would hide context; tabs would bury it.
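
One way to model the horizontal stack is a plain array of panels, each recording which panel spawned it. The post doesn't show its state shape, so everything below is a hypothetical sketch: the Panel type, openBranch, ancestry, and especially the rule that branching from a panel first closes any panels to its right are assumptions about how such a stack could behave.

```typescript
// Hypothetical panel record: index 0 is the main chat, later entries are branches.
interface Panel {
  id: number;
  title: string; // e.g. "Quantum Computing"
  parentId: number | null; // panel this branch was opened from (null for the main chat)
}

// Open a branch from the panel at parentIndex. Assumption: panels to the right of
// the parent are closed first, so the stack always reads left to right as one path.
function openBranch(stack: Panel[], parentIndex: number, title: string): Panel[] {
  const kept = stack.slice(0, parentIndex + 1);
  const nextId = Math.max(...stack.map((p) => p.id)) + 1;
  return [...kept, { id: nextId, title, parentId: kept[parentIndex].id }];
}

// Follow parent links back to the main chat to recover a panel's ancestry.
function ancestry(stack: Panel[], id: number): string[] {
  const byId = new Map(stack.map((p) => [p.id, p] as const));
  const path: string[] = [];
  let current = byId.get(id);
  while (current) {
    path.unshift(current.title);
    current = current.parentId === null ? undefined : byId.get(current.parentId);
  }
  return path;
}

let stack: Panel[] = [{ id: 0, title: "Main chat", parentId: null }];
stack = openBranch(stack, 0, "Quantum Computing");
stack = openBranch(stack, 1, "Qubit");
console.log(ancestry(stack, 2)); // [ 'Main chat', 'Quantum Computing', 'Qubit' ]
stack = openBranch(stack, 0, "Flight"); // branching from the main chat closes both branches
console.log(stack.map((p) => p.title)); // [ 'Main chat', 'Flight' ]
```

Storing a parent id on each panel, rather than relying on array position alone, is what lets any panel reconstruct where it came from.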

Context inheritance with explicit framing. When you click "Quantum Computing," the new panel doesn't just ask the model about that term in isolation. It receives a carefully constructed prompt:

Given the context of "[your original question]", explain "Quantum Computing" while keeping it short and concise

Each panel knows its ancestry. The AI adapts its response depth and framing based on where you came from.
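
The framing itself is plain string templating. A minimal sketch: buildBranchPrompt is a hypothetical name, and it assumes a branch needs only the main chat's original question and the clicked term, reproducing the template quoted above word for word.

```typescript
// Hypothetical helper: frame a branch request with the question that started the thread.
// The wording mirrors the template quoted in the post.
function buildBranchPrompt(originalQuestion: string, term: string): string {
  return `Given the context of "${originalQuestion}", explain "${term}" while keeping it short and concise`;
}

console.log(buildBranchPrompt("How do computers break encryption?", "Quantum Computing"));
// Given the context of "How do computers break encryption?", explain "Quantum Computing" while keeping it short and concise
```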

Bidirectional synchronization. This was the detail that made it feel right. If you highlight any text in the main chat and click the branch icon, a new panel opens for that selection. If you click an :expandable term, that text is highlighted and its panel opens. A single CustomEvent, expandable-click, keeps the markdown component and the stacking container in sync without coupling them tightly.
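
The decoupling comes from the DOM's CustomEvent: the markdown component only dispatches, and the stacking container only listens. A minimal sketch, assuming a detail payload of label and to (the post names the event but not its payload; the helper names are hypothetical). It uses a standalone EventTarget so it also runs outside a browser, since Node 19+ provides CustomEvent globally; in the app, the target would typically be window or document.

```typescript
// Hypothetical payload shape for the expandable-click event.
interface ExpandableClickDetail {
  label: string; // text the user clicked
  to: string; // panel title from the to="..." attribute
}

const EVENT_NAME = "expandable-click";

// Producer side (markdown component): announce the click, knowing nothing about panels.
function emitExpandableClick(target: EventTarget, detail: ExpandableClickDetail): void {
  target.dispatchEvent(new CustomEvent<ExpandableClickDetail>(EVENT_NAME, { detail }));
}

// Consumer side (stacking container): react to clicks, knowing nothing about markdown.
// Returns an unsubscribe function, e.g. for a React effect cleanup.
function onExpandableClick(
  target: EventTarget,
  handler: (detail: ExpandableClickDetail) => void,
): () => void {
  const listener = (event: Event) =>
    handler((event as CustomEvent<ExpandableClickDetail>).detail);
  target.addEventListener(EVENT_NAME, listener);
  return () => target.removeEventListener(EVENT_NAME, listener);
}

const bus = new EventTarget(); // stand-in for window in a browser
const stop = onExpandableClick(bus, (d) => console.log(`open panel: ${d.to}`));
emitExpandableClick(bus, { label: "**lift**", to: "Lift" }); // prints: open panel: Lift
stop();
emitExpandableClick(bus, { label: "**flight**", to: "Flight" }); // no output: listener removed
```

Because dispatchEvent calls listeners synchronously, the panel can open in the same tick as the click, and neither side needs to import the other.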

Remark directive plugin for custom markdown. I use remark-directive with a custom plugin to transform the :expandable[text]{ to="Title" } syntax into React components. The plugin walks the abstract syntax tree, finds directive nodes, and converts them into custom elements that my markdown renderer knows how to handle. This separation kept the architecture clean: the AI generates formatted text, remark handles parsing, and React handles interaction.
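
The remark-directive README shows the usual pattern for this: find directive nodes and set data.hName and data.hProperties so the later markdown-to-HTML-tree step emits a custom element. Here is a dependency-free sketch of such a plugin for the expandable directive. It hand-rolls the tree walk (a real plugin would more likely use unist-util-visit) and declares a minimal node type instead of importing the mdast types; the output element name expandable and its to property are assumptions about what the renderer maps to a React component.

```typescript
// Minimal stand-in for the mdast nodes remark-directive produces (assumption: only
// the fields this plugin touches). Inline :name[...]{...} directives are "textDirective" nodes.
interface MdNode {
  type: string;
  name?: string;
  attributes?: Record<string, string | null | undefined>;
  children?: MdNode[];
  value?: string;
  data?: { hName?: string; hProperties?: Record<string, unknown> };
}

// Unified-style plugin: returns a transformer that mutates the tree in place.
function remarkExpandable() {
  return (tree: MdNode): void => {
    const walk = (node: MdNode): void => {
      if (node.type === "textDirective" && node.name === "expandable") {
        const data: NonNullable<MdNode["data"]> = node.data ?? {};
        data.hName = "expandable"; // element name the React renderer maps to a component
        data.hProperties = { to: node.attributes?.to ?? "" };
        node.data = data;
      }
      node.children?.forEach(walk);
    };
    walk(tree);
  };
}

// Hand-built tree equivalent to: Airplanes achieve :expandable[**flight**]{ to="Flight" }
const tree: MdNode = {
  type: "root",
  children: [{
    type: "paragraph",
    children: [
      { type: "text", value: "Airplanes achieve " },
      {
        type: "textDirective",
        name: "expandable",
        attributes: { to: "Flight" },
        children: [{ type: "strong", children: [{ type: "text", value: "flight" }] }],
      },
    ],
  }],
};

remarkExpandable()(tree);
const directive = tree.children?.[0].children?.[1];
console.log(directive?.data?.hName, directive?.data?.hProperties); // expandable { to: 'Flight' }
```

The directive's children (here the bold "flight") are left untouched, so the label keeps its formatting inside the custom element.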

Standing on Andy's Shoulders

The concept of progressive disclosure in note-taking isn't new. Andy Matuschak has been exploring this territory for years with his evergreen notes and with projects like Quantum Country, built with Michael Nielsen, which embeds spaced-repetition review directly inside explanatory essays. His work on note-taking environments that encourage exploration and connection-building directly inspired this POC.

What I wanted to explore was whether progressive disclosure could work in AI-generated content, where the "notes" are created on-demand in response to your curiosity. Instead of carefully curated, pre-written notes that link to each other, what if the AI generated those links - and the linked content - as you explored?

What Surprised Me

Two things caught me off guard.

First, how reliably prompt engineering created consistent UX patterns. I expected I'd need to build some NLP layer to identify important terms, maybe train a custom model. Turns out, GPT-4 is exceptionally good at following formatting instructions. The :expandable syntax appears consistently, in the right places, without hallucinating broken markdown. Prompt-based UX might be more powerful than we give it credit for.

Second, how natural the interaction felt once it was working. There's something cognitively satisfying about clicking a term and having a panel slide in with more detail. The horizontal stacking mimics how we spatially organize thoughts - main idea on the left, details branching to the right. The bidirectional sync between highlighting and panel activation made the interface feel cohesive rather than like two separate features bolted together.

Future versions could add session memory, collaborative exploration, and source tracking, but that's a different post. The point of this POC was to prove the core interaction model, and that part works.

Closing Thoughts

Building the Rabbit Hole made me rethink how we interact with AI. We've borrowed the linear chat interface from messaging apps, but AI isn't a person on the other end of a text thread. It's a knowledge synthesis engine. Why shouldn't the interface reflect that?

The :expandable syntax is a small innovation - barely a feature, really. But it transforms how you experience AI-generated explanations. Instead of asking follow-up questions and losing your place, you branch. Instead of choosing between depth and breadth, you get both.

If you're building AI interfaces, I'd encourage you to question the defaults we've inherited. The linear chat window is one possibility, not the only one.